Our Vision Statement

Mental health disorders are humanity's most critical health challenge, bringing immense suffering to millions of patients and their caregivers and costing 4% of global GDP (US$5 trillion) every year. Yet we still treat and monitor these conditions the same way we did decades ago. In an era of rising prevalence and a worsening shortage of clinicians, it’s time for a radical change.

The Measurement Gap

Mental health assessment today is like examining a complex painting through a keyhole. Clinicians make life-altering decisions based on brief, sporadic consultations in which they synthesize patients’ subjective complaints with their own observations. The result? Only 7% of patients suffering from mental illness receive effective treatment.

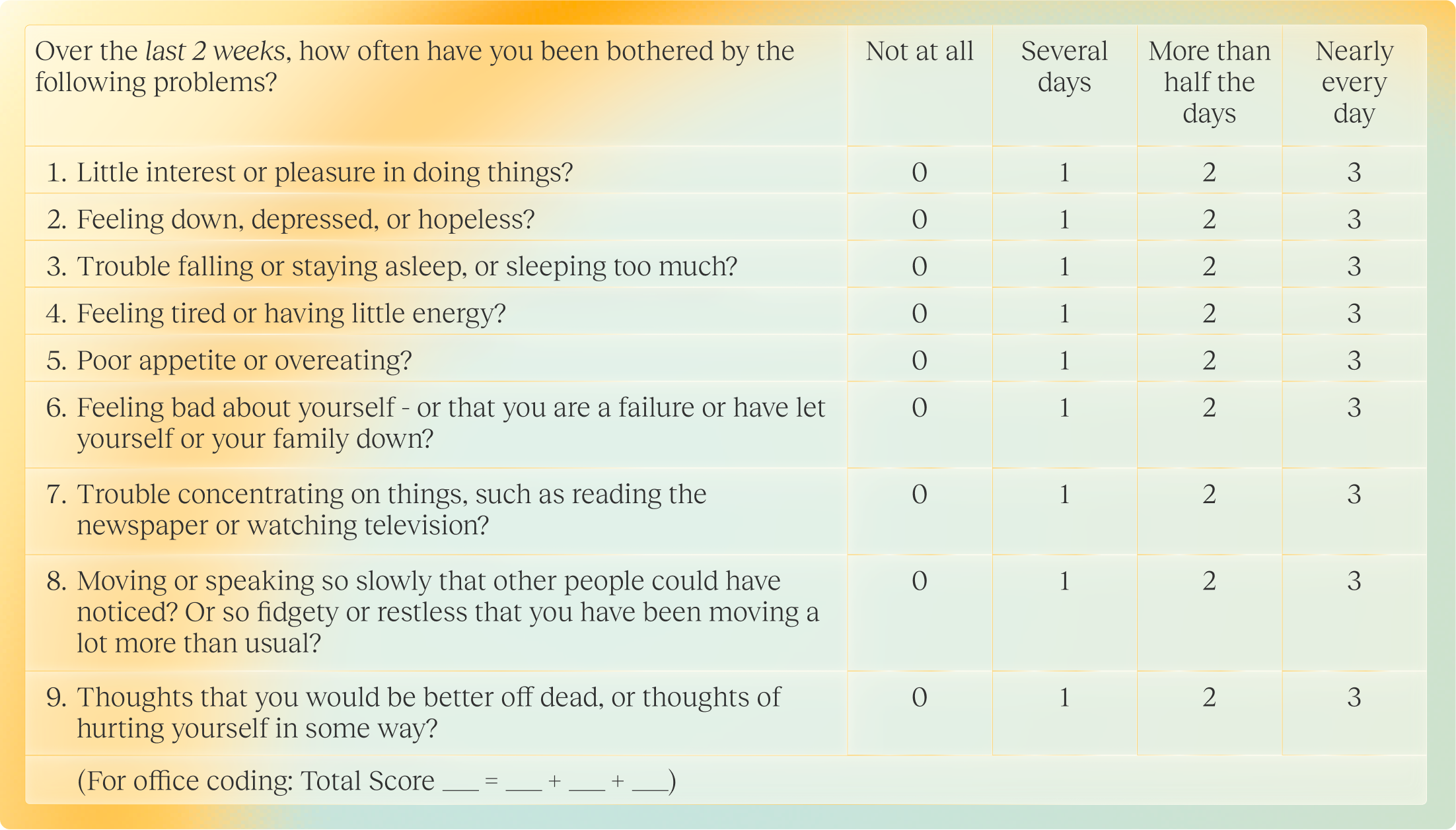

The solution is clear: measurement-based care, at scale. When clinicians use standardized assessments, outcomes improve dramatically: patients with severe depression achieve remission three times as often and recover twice as fast.

Yet current solutions fall short. Clinician-administered questionnaires take more than 30 minutes to complete, making them impractical in routine settings. Patient self-reporting tools have their limits too: a real-world study by Limbic found that only 19% of patients keep using mental health apps beyond six weeks. This poor engagement stems from the motivational deficits inherent to many mental health conditions. Meanwhile, anosognosia, a clinical inability to recognize one’s own illness, further undermines the accuracy of self-reports.

Why Traditional AI Failed Mental Healthcare

Traditional AI isn’t built for the messy reality of mental health: medical records are a labyrinth of unstructured data, and psychiatric symptoms are subtle, shifting patterns of behavior, emotion, and thought that defy simple labeling.

Existing AI tools usually try to predict one or two symptoms from isolated signals such as voice, sleep, or movement. These siloed models miss the broader clinical picture and break down outside controlled environments. Making them more robust, for example by adding a new modality, means starting from scratch and retraining the entire system on newly labeled datasets.

The limitations of existing AI models and standardized questionnaires explain why measurement-based care is not yet the norm in psychiatry, despite its clear clinical benefits. They also explain why clinicians still rely on the same outdated tech stack: a basic calendar and a clunky patient health record.

The Dawn of Foundation Models

Foundation models represent a paradigm shift in machine learning. These systems are first pre-trained on vast unlabeled datasets and then adapted to specific tasks through fine-tuning on limited labeled data. For example, Large Language Models (LLMs) are initially trained to predict the next word in a sequence, then adapted to countless applications, such as coding or writing poems. Researchers have found that training them on one task often improves their performance on others and can even unlock capabilities they were never designed for. Today’s LLMs outperform traditional AI approaches across most applications.
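The two-stage recipe above (pre-train without labels, then adapt with a few labels) can be illustrated with a deliberately tiny Python sketch. It is a toy analogy under stated assumptions, not how LLMs or Callyope's model are trained: principal-component analysis by power iteration stands in for self-supervised pre-training, and a one-feature logistic-regression head stands in for fine-tuning. The two-cluster data and all function names are hypothetical.

```python
import math
import random


def make_points(rng, n, center, spread=0.5):
    """Hypothetical toy data: n 2-D points scattered around a center."""
    return [(center[0] + rng.gauss(0, spread), center[1] + rng.gauss(0, spread))
            for _ in range(n)]


def pretrain_direction(points, iters=100):
    """Stage 1 (no labels): find the first principal component of the data
    by power iteration on its 2x2 covariance matrix."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    v = (1.0, 0.0)
    for _ in range(iters):
        w = (sxx * v[0] + sxy * v[1], sxy * v[0] + syy * v[1])
        norm = math.hypot(w[0], w[1])
        v = (w[0] / norm, w[1] / norm)
    return (mx, my), v


def encode(point, mean, direction):
    """Frozen 'pretrained' encoder: project a centered point onto the learned direction."""
    return (point[0] - mean[0]) * direction[0] + (point[1] - mean[1]) * direction[1]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def finetune_head(features, labels, lr=0.1, epochs=500):
    """Stage 2 (few labels): fit a 1-D logistic-regression head by SGD;
    the encoder stays frozen."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            err = sigmoid(w * x + b) - y  # gradient of the log-loss w.r.t. the logit
            w -= lr * err * x
            b -= lr * err
    return w, b


rng = random.Random(0)
# Plenty of unlabeled data for pre-training...
unlabeled = make_points(rng, 100, (-2, -2)) + make_points(rng, 100, (2, 2))
mean, direction = pretrain_direction(unlabeled)

# ...and only four labeled examples for fine-tuning.
labeled = make_points(rng, 2, (-2, -2)) + make_points(rng, 2, (2, 2))
labels = [0, 0, 1, 1]
w, b = finetune_head([encode(p, mean, direction) for p in labeled], labels)

# Evaluate on fresh points from both clusters.
test_set = ([(p, 0) for p in make_points(rng, 50, (-2, -2))]
            + [(p, 1) for p in make_points(rng, 50, (2, 2))])
correct = sum((sigmoid(w * encode(p, mean, direction) + b) > 0.5) == bool(y)
              for p, y in test_set)
accuracy = correct / len(test_set)
print(f"direction={direction}, accuracy={accuracy:.2f}")
```

Because the encoder is learned once from unlabeled points, only the small head needs labels. In a real foundation model both stages are vastly larger, and fine-tuning may update the encoder as well.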

Foundation models unlock unprecedented potential for psychiatry. First, their generalization capabilities make them robust to highly heterogeneous clinical pictures. Second, they can comprehensively assess patient behavior by learning across heterogeneous datasets with imperfect labeling or structure. Psychiatry’s complex medical data, once seen as a computational liability, becomes a rich source of highly contextualized medical insights.

Speech emerges as the ideal modality for psychiatry. It is the primary medium of psychiatric evaluation: clinicians analyze what their patients say when reporting their own symptoms, and they look for signs of relapse risk in the way patients speak, such as disorganized or pressured speech. While powerful audio foundation models like Phi-4-multimodal (Microsoft) and Qwen2.5-Omni (Alibaba) display remarkable capabilities, they fall short on medical use cases because labeled clinical data is scarce. The technology is here; what is missing is adequate clinical data to make foundation models a reality for psychiatry.

The First AI Copilot for Mental Health Clinicians

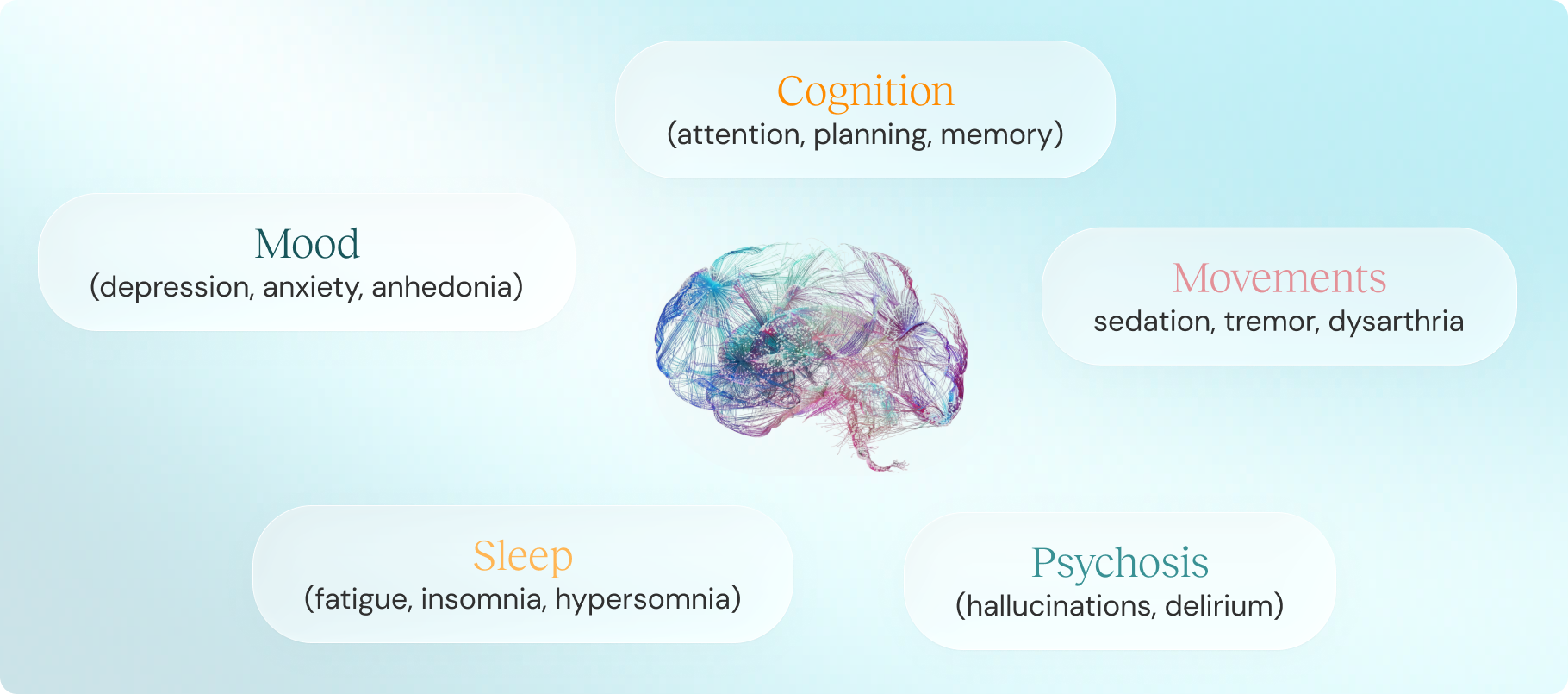

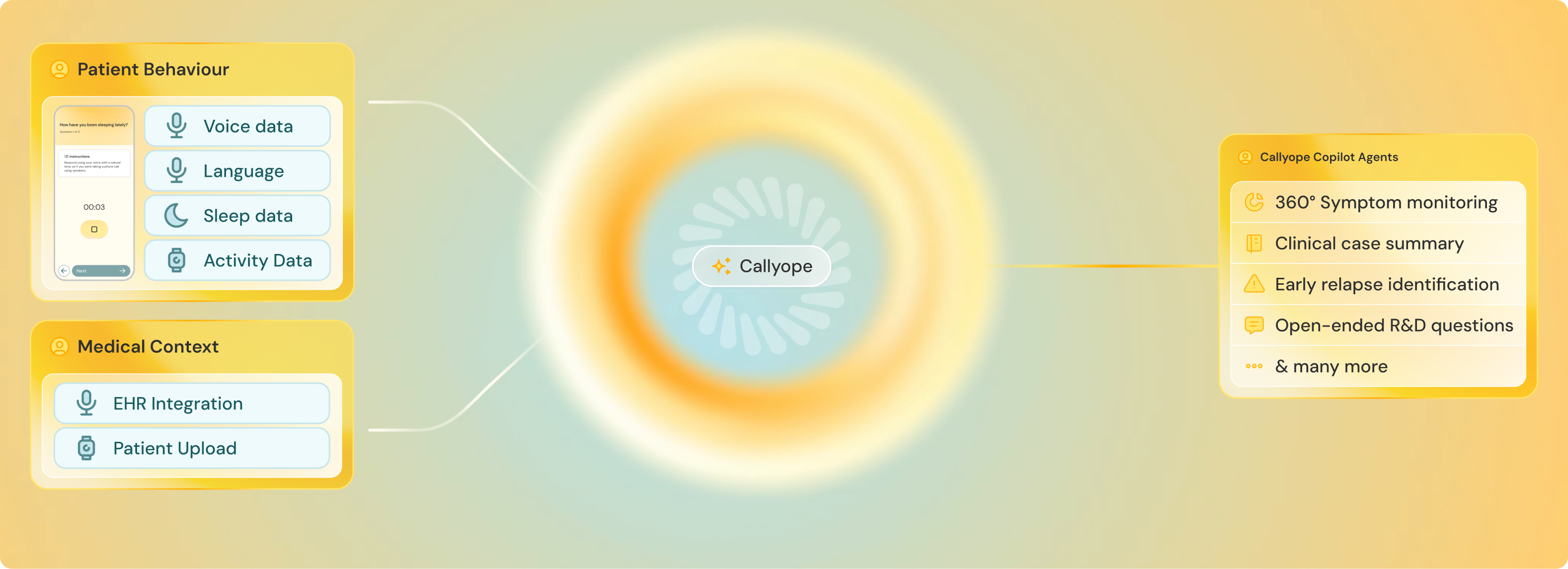

Callyope is creating the first Audio-Language Foundation Model engineered specifically for psychiatry. Through ambitious clinical trials and research collaborations, we are building the world’s most comprehensive mental health dataset. Our model goes beyond single-signal approaches, integrating multiple modalities such as speech, smartphone sensor, wearable, and EHR data, and covering a spectrum of conditions including depression, bipolar disorders, and schizophrenia.

This Foundation Model can provide in-depth, contextual behavioral analysis. Designed with flexibility at its core, it can be specialized into various AI agents, all accessible through Callyope Copilot, the first AI copilot for mental health practitioners.

Callyope Copilot will augment clinicians much as GitHub Copilot augments programmers. Clinicians will interact with medical data through natural, open-ended queries. Imagine asking “Did this patient try this treatment? If so, why was it discontinued?” and receiving actionable medical insights through validated, foundation-model-powered Software as a Medical Device (SaMD) solutions.

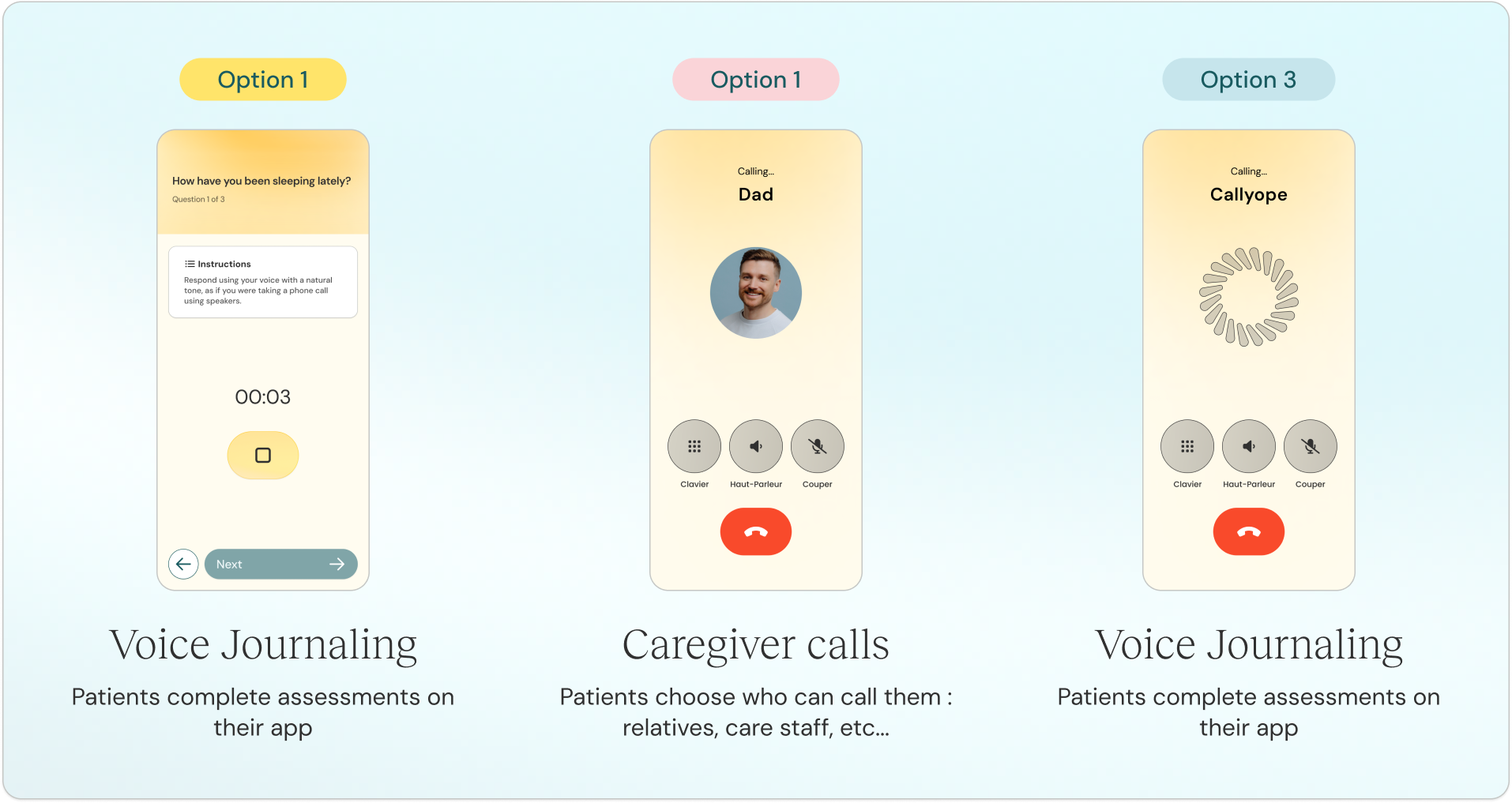

For patients, we envision a technology that approaches zero interface, where data collection becomes so seamless and unobtrusive that monitoring feels natural, not invasive. Our patient engagement philosophy is radical: minimal burden, maximum agency. Patients retain granular control over data sharing: they choose among occasional symptom self-reporting, voice journaling, or passive speech assessment, select which phone conversations are analyzed, and more.

We are creating a mental healthcare ecosystem that prioritizes proactive management over crisis response. Where subtle behavioral shifts trigger timely interventions. Where treatment decisions stem from objective data rather than trial and error. Where patients become active participants in their care. Where clinicians spend less time on documentation and more on human connection.

The future of mental health lies with foundation models and AI agents built for psychiatry. That future begins with Callyope.